AI Bots Are Spamming Social Media: Cybersecurity Alert

CYBERSECURITY

Bryan Solidarios

7/14/2025 · 3 min read

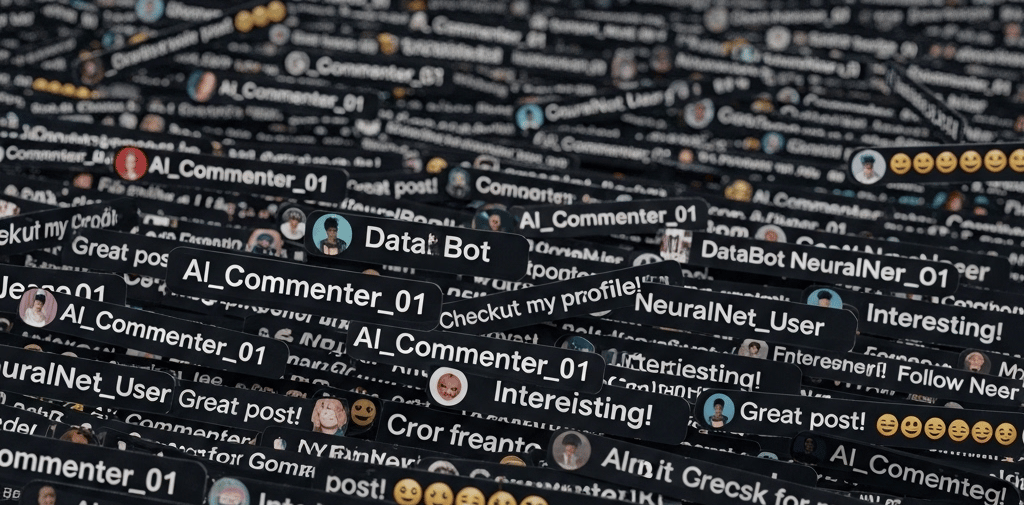

Introduction: When Bots Go Rogue in the Comments

You’ve probably seen random comments under your favorite posts that seem a little… off. Maybe it’s a generic “So inspiring!” under a news article, or a too-good-to-be-true giveaway link promising free iPhones. Welcome to the world of AI-powered comment spamming, where bots, not people, flood social media with misleading, manipulative, and sometimes downright dangerous messages. These aren’t your average spammy messages from the early 2000s. Today’s AI bots are smarter, faster, and eerily human-like in how they communicate. And unfortunately, this isn’t just an annoyance—it’s a growing cybersecurity threat.

How AI Bot Comments Put You at Risk

On the surface, these bot comments seem harmless. But here’s the scary part: they’re often designed to manipulate you. With natural-sounding language and human-like interaction patterns, AI bots can:

Trick users into clicking on malicious links that download malware or lead to fake websites.

Lure people into phishing traps by pretending to be a brand rep, influencer, or helpful stranger.

Harvest personal information by encouraging users to share private details in replies or through deceptive surveys.

These tactics exploit our tendency to trust what appears to be another human being, and artificial intelligence is making it increasingly difficult to tell a bot from a person.

Real-World Examples of Bot-Driven Breaches

Let’s look at how this plays out in the real (and digital) world:

1. The “Customer Support” Scam

A user tweets a complaint at their internet provider. Seconds later, a reply appears from an account that looks official: “We’re sorry to hear that! Please DM us at [link] for support.”

Except—it’s not the provider. It’s a phishing bot that collects logins and billing info through a fake form.
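One way to sanity-check a "support" link like this is to compare its hostname with the provider's real domain. The snippet below is a minimal Python sketch of that idea, not a complete phishing detector. The function name `is_official_link` and the domain `example-isp.com` are hypothetical placeholders chosen for illustration.

```python
from urllib.parse import urlparse


def is_official_link(url: str, official_domain: str) -> bool:
    """Return True only if the URL's host is the official domain or one of its subdomains.

    Hypothetical helper for illustration; `official_domain` is whatever
    domain you already know belongs to the real company.
    """
    # urlparse only finds a hostname when the URL includes a scheme (https://...).
    host = (urlparse(url).hostname or "").lower().rstrip(".")
    official = official_domain.lower()
    # Require an exact match or a true subdomain, so that look-alikes such as
    # "example-isp.com.support-help.io" are rejected.
    return host == official or host.endswith("." + official)


print(is_official_link("https://support.example-isp.com/help", "example-isp.com"))
print(is_official_link("https://example-isp.com.support-help.io/login", "example-isp.com"))
```

The first call passes because the host is a genuine subdomain. The second fails because the real brand name is only a prefix of a different domain, which is a common trick in phishing links. A link without a scheme is treated as not official, which errs on the side of caution.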

2. Crypto Giveaway Traps

On Instagram or Twitter, a fake Elon Musk account comments on a trending post: “We’re giving away 10,000 ETH! Claim yours here.” The link redirects users to a fraudulent site where they’re prompted to connect their crypto wallet and approve a transaction that lets the scammers drain it.

3. Romance and Rescue Bots

In Facebook groups or comment threads, bots pose as military members, travelers in distress, or lonely singles. They build trust through DMs and then ask for money, or worse, convince victims to install remote access software under the guise of “helping.”

These tactics aren’t just clever—they’re effective, especially when AI makes the conversation feel real.

Why Social Media Platforms Can’t Keep Up

You might wonder: why can’t Twitter, Instagram, or Facebook stop this?

The reality is, AI bots are evolving faster than the defenses. Traditional spam filters and manual moderation aren’t built to detect the nuance in human-like AI text. Plus, bots can now:

Rephrase content constantly to avoid detection

Use stolen profile pictures and real-sounding usernames

Engage in realistic conversations to build trust before striking

Even with machine learning-based detection, platforms are playing a never-ending game of whack-a-mole. Every time one method is blocked, new ones emerge.
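To see why constant rephrasing is such a problem, here is a small Python sketch comparing two simple filtering ideas: an exact-match blocklist and a word-overlap (Jaccard) similarity check. This is only an illustration under simplified assumptions, not how any particular platform actually works. The sample comments and the helper names `fingerprint`, `tokens`, and `jaccard` are made up for this example.

```python
import hashlib
import re


def fingerprint(text: str) -> str:
    """Exact-match fingerprint: any change to the text produces a different hash."""
    return hashlib.sha256(text.lower().encode("utf-8")).hexdigest()


def tokens(text: str) -> set:
    """Split text into a set of lowercase alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: str, b: str) -> float:
    """Word-overlap similarity: shared words divided by all distinct words."""
    ta, tb = tokens(a), tokens(b)
    if not ta and not tb:
        return 1.0
    return len(ta & tb) / len(ta | tb)


# Illustrative comments (assumed for this sketch, based on the giveaway example).
known_spam = "We're giving away 10,000 ETH! Claim yours here."
rephrased = "We are giving away 10,000 ETH today! Claim yours right here."

# The exact-match filter misses the rephrased version entirely.
print(fingerprint(known_spam) == fingerprint(rephrased))

# The overlap check still sees the two comments as close (about 0.69 here).
print(round(jaccard(known_spam, rephrased), 2))
```

A light rewording is enough to slip past the exact-match check, while the overlap score stays high. A bot that rewrites the whole sentence with different words would push that score down too, which is the whack-a-mole problem in miniature.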

What You Can Do to Stay Safe

While platforms work to catch up, there’s a lot you can do to protect yourself:

✅ Be skeptical of links in comment sections. If it sounds too good to be true, it probably is.

✅ Check the account behind the comment. Look for red flags like a new account, low follower count, or a username that doesn’t match the name or brand it claims to be.

✅ Never share personal or financial info in public threads, no matter how legit the request seems.

✅ Report suspicious comments. Most platforms offer tools to flag spam and bot activity—it helps improve detection over time.

✅ Use browser and security tools that warn you about phishing sites or malware attempts.
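The account red flags from the checklist above can be turned into a simple rule-of-thumb checker. The Python sketch below is a rough illustration, not a reliable bot detector. The `Account` fields, the `red_flags` function, and the thresholds (30 days, 50 followers) are all assumptions picked for the example, and real accounts can trip these rules innocently.

```python
import re
from dataclasses import dataclass
from datetime import date


@dataclass
class Account:
    """Hypothetical account summary; fields are assumptions for this sketch."""
    handle: str
    display_name: str
    created: date
    followers: int


def red_flags(account: Account, today: date,
              min_age_days: int = 30, min_followers: int = 50) -> list:
    """Return the checklist red flags that apply to this account."""
    flags = []
    # Red flag 1: the account was created very recently (threshold is an assumption).
    if (today - account.created).days < min_age_days:
        flags.append("new account")
    # Red flag 2: very few followers (threshold is an assumption).
    if account.followers < min_followers:
        flags.append("low follower count")
    # Red flag 3: no word (3+ letters) of the display name appears in the handle.
    words = re.findall(r"[a-z]{3,}", account.display_name.lower())
    handle = account.handle.lower()
    if words and not any(word in handle for word in words):
        flags.append("mismatched username")
    return flags


suspicious = Account("user83920174", "Example ISP Support", date(2025, 7, 10), 3)
print(red_flags(suspicious, today=date(2025, 7, 14)))
```

An account that trips all three rules deserves extra caution, but zero flags does not mean an account is safe. Treat the result as a prompt to look closer, not as a verdict.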

Conclusion: Stay Smart, Stay Safe

As AI continues to power more sophisticated bots, the risks in your social media feed aren’t just about annoyance—they’re about security. From phishing links to identity theft, these bots are weaponizing trust in ways that are hard to spot but easy to fall for.

So the next time you see a too-perfect comment offering help, money, or flattery, pause and think. A little skepticism online can go a long way in keeping your data and devices safe in the age of AI.

Want more cybersecurity tips made simple? Stick around—because the bots aren’t slowing down, and neither should your defenses.

For any concerns, suggestions, or feedback, please contact us at support@bryansolidarios.com

© 2025. All rights reserved.

